DIGITAL HUMAN - BEHIND THE SCENES

developer diary and the making of the digital human

From Concept to Showcase

Digital Human - Behind the Scenes

Our vision was to create the most realistic 1:1 copy of a human being possible, from high-resolution scanned geometry and textures to motion-tracked animations. Creating a hyperreal copy of a real human actor was our main challenge. Join us and learn more about the story behind the Digital Human project and how it all started.

Check out our behind-the-scenes video. Enjoy!

Setting our goals

Redefining Digital Humans

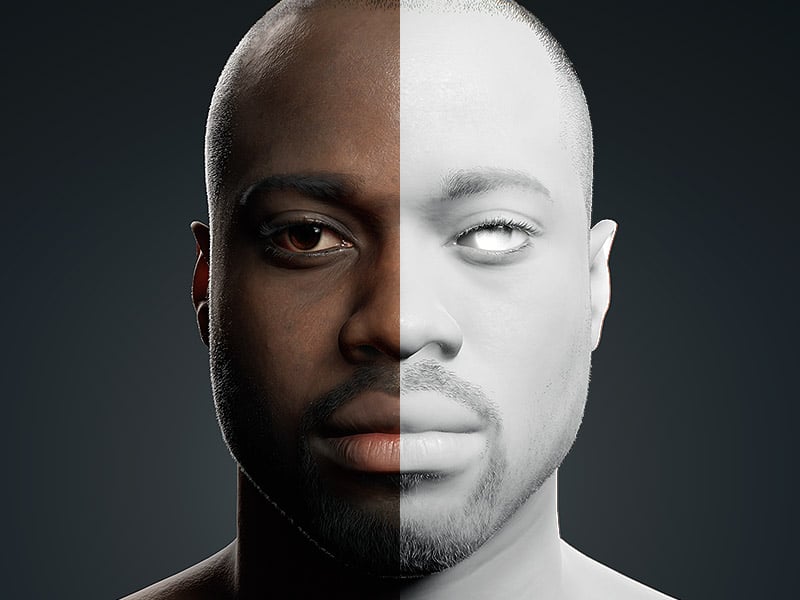

The Digital Human is an interactive, human-like interface between people and computers. Renderpeople created this project to make a fundamental contribution to the further development of this technology. Our aspiration was to set an entirely new quality standard compared to our existing static product range. It was essential for us to create a copy of a human that is as photorealistic and lifelike as possible, and to optimize it so that it works even in real-time applications. The goal was to exhaust the possibilities of our photogrammetry scanner and use all the available data to reach an entirely new level of detail.

Outlining the concept

Focusing on what makes humans real

Initially, we focused on creating a photorealistic human head that works in real time in Unreal Engine. The body also had to reproduce all natural movements so that our 3D actor could perform every expression needed for the showcase. It was a huge challenge for the whole team, organizationally, logistically, and of course for our 3D artists. The challenge started with planning, implementation, and pre-production, continued with the scanning process and the operation of our photogrammetry scanner, and ended with the post-processing of the individual scans.

Next level photoscanning

Capturing all details

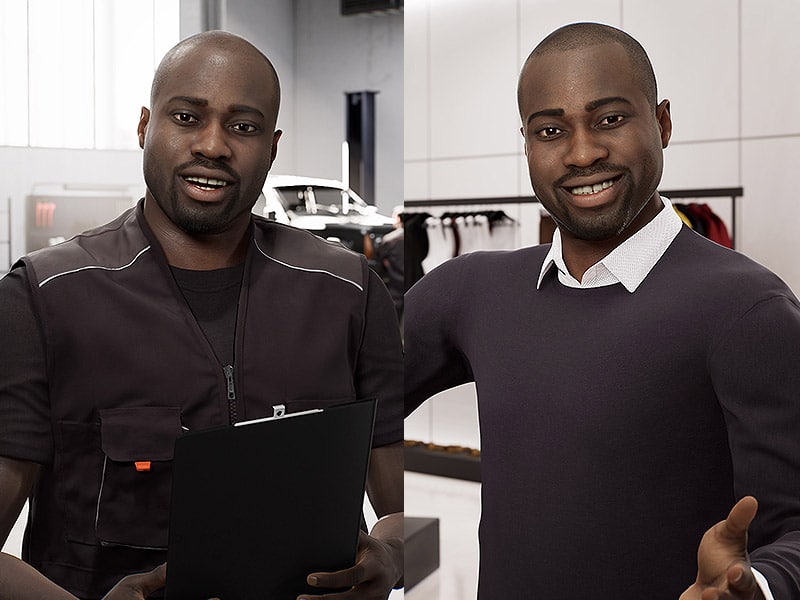

To digitally capture Fred, we used our photogrammetry scanner, which has over 300 precisely aligned DSLR cameras. In addition, we integrated a dedicated facial scan area, which captures even the smallest pores of the face. Our high-resolution 3D scans capture even the most delicate details and make them usable for our Digital Human. The result of our scan session is a set of highly detailed 3D meshes with 16K textures, from which we can create a millimeter-accurate 1:1 copy of the human.

Replicating true emotion

Recording Visemes and Expressions

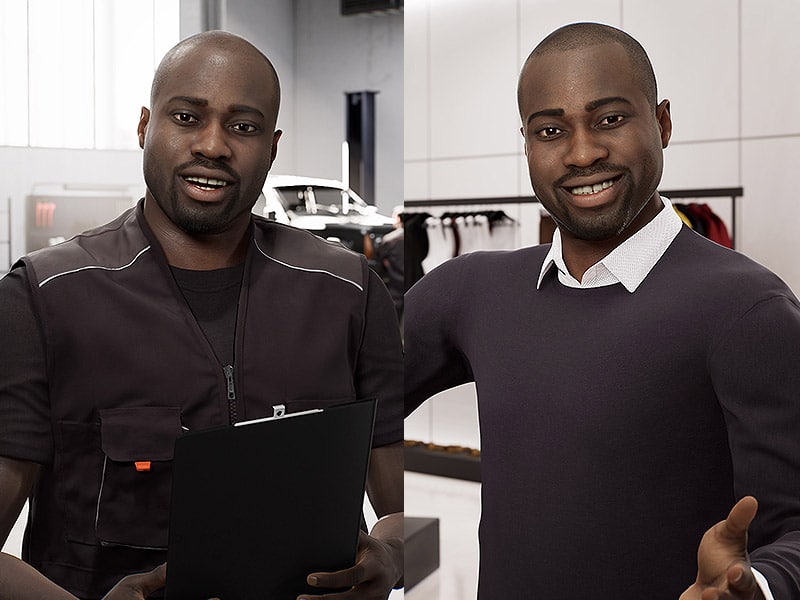

We recorded countless phonemes and various poses of our model Fred, trying to digitize muscles, textures, and motion sequences as true to life as possible so that we could replicate his genuine emotions and his real-life acting in 3D. Covering a wide range of motion and all deformations of the different facial muscle areas was key to creating a hyperrealistic face rig with realistic blend shapes.

Digitizing all movements

Motion Capturing Session

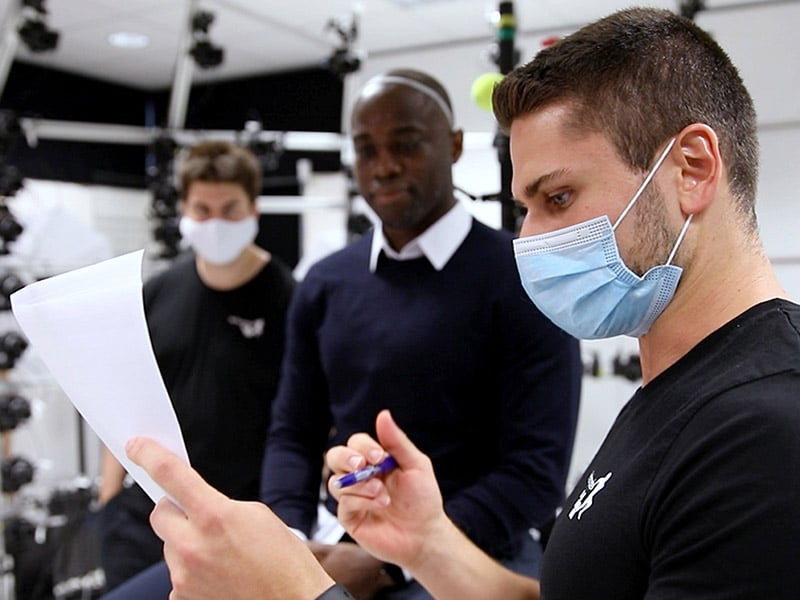

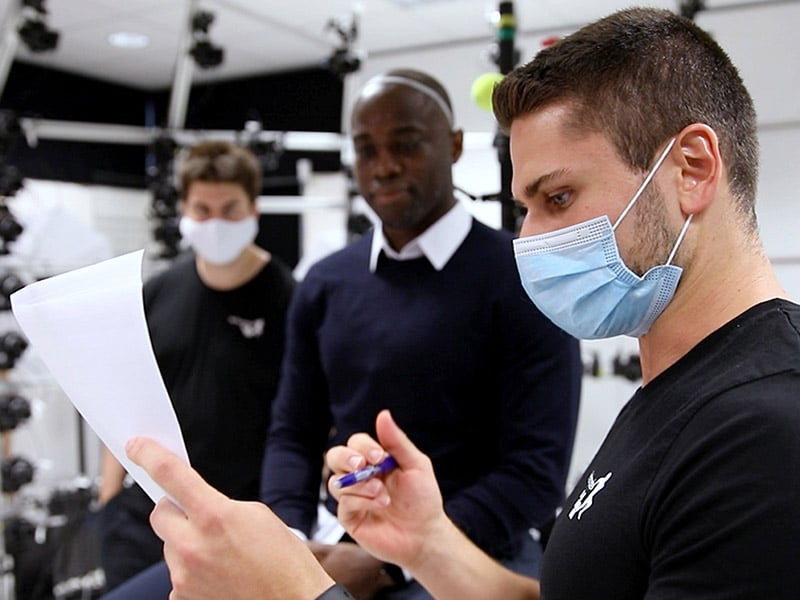

As in professional film productions, we shot many takes so that we could pick the best footage for the actual showcase. From the many motion shots, we picked the most realistic and appropriate scene for our setting. We experimented with different tones and voices, and with various facial expressions. Creative freedom was essential for us to get the most authentic performance possible. We used the Xsens MVN Link suit to record the body movements. Fred's movements were recorded locally with our high-performance motion capture system.

Enhancing detail

Post-Processing Workflow

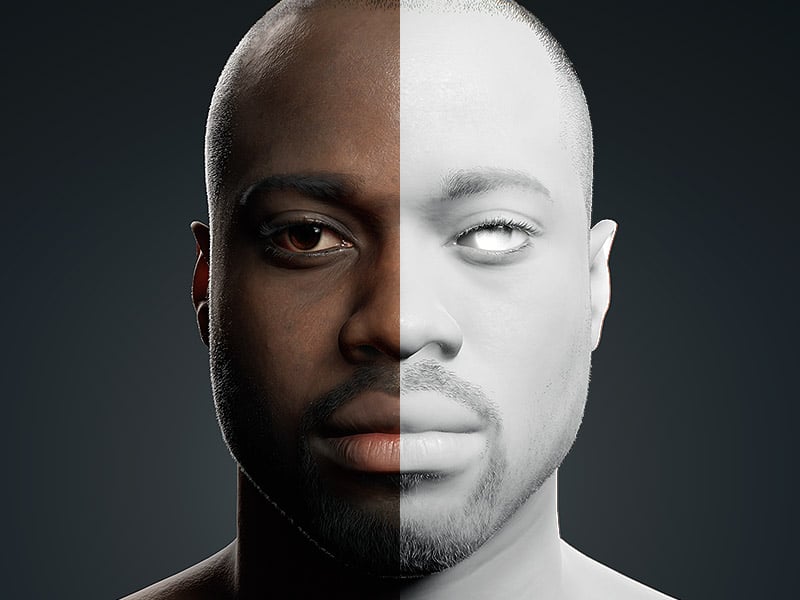

The captured raw scans were processed in RealityCapture and then manually cleaned up in ZBrush. We imported the resulting low-poly model back into ZBrush to have enough resolution and detail for further refinements. By projecting the base mesh onto the high-res scan, we could preserve a lot of detail that would otherwise have been lost in the reduction process. We defined extreme poses as facial expressions that show an emotion at its maximum intensity. These poses serve as an anatomical framework for the resulting blend shapes.
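To illustrate how extreme poses can act as a framework for blend shapes, here is a minimal Python sketch of standard linear blend shape mixing: each target pose contributes its offset from the neutral mesh, scaled by a weight between 0 and 1. This is a generic illustration under simplifying assumptions, not the rig used for the showcase; the function name `blend_shapes` and the pose names `smile` and `jaw_open` are hypothetical.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def blend_shapes(
    neutral: List[Vertex],
    targets: Dict[str, List[Vertex]],
    weights: Dict[str, float],
) -> List[Vertex]:
    """Mix target poses into the neutral mesh (linear blend shapes).

    For every vertex: result = neutral + sum(weight * (target - neutral)).
    All meshes are assumed to share the same vertex count and order.
    """
    result: List[Vertex] = []
    for i, (nx, ny, nz) in enumerate(neutral):
        x, y, z = nx, ny, nz
        for name, weight in weights.items():
            tx, ty, tz = targets[name][i]
            # Add this pose's offset from the neutral vertex, scaled by its weight.
            x += weight * (tx - nx)
            y += weight * (ty - ny)
            z += weight * (tz - nz)
        result.append((x, y, z))
    return result


# Hypothetical two-vertex "mesh" with two extreme poses.
neutral_mesh = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
extreme_poses = {
    "smile": [(0.0, 1.0, 0.0), (1.0, 2.0, 0.0)],
    "jaw_open": [(0.0, 0.0, -1.0), (1.0, 0.0, -1.0)],
}
mixed = blend_shapes(neutral_mesh, extreme_poses, {"smile": 0.5, "jaw_open": 1.0})
```

A weight of 0 leaves the neutral face unchanged and a weight of 1 reproduces the extreme pose exactly, which is why cleanly captured extreme poses matter: every in-between expression is interpolated from them.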

Creating the showcase

Unreal Engine 4 Setup

We chose Unreal Engine 4 for our showcase. Its integrated interfaces, tools, and excellent real-time rendering capabilities are perfect for our use case, so we created our Digital Human scene and character setup directly in Unreal Engine. All meshes, textures, skeletons/rigs, and animations are combined to create the final Digital Human model. The animation, material, and blend shape setups we used allowed us to make changes on the fly and get the best out of our Digital Human model and rig.

Final presentation

MEET THE DIGITAL HUMAN

We constantly interact with other human beings, no matter where we go. Interacting with virtual doubles and digital assistants is becoming more and more common. The next logical step is to create more realistic Digital Humans who can interact with you.

Tech Breakdown:

Creating the Digital Human

Creating a hyperreal copy of a real human actor was both our primary goal and the main challenge for our entire team.

Powered by years of experience in creating human 3D models, we focused on making the most detailed Digital Human possible. Get a more technical view of what we did to make our Digital Human.
